Simon Jenner

Wednesday 1 June 2022

We built a Typeform competitor using no-code and share our journey to landing the first 100 paying customers. Learn insights and strategies from the process.

We are building and iterating in public, so this blog post will be updated each time we make an iteration.

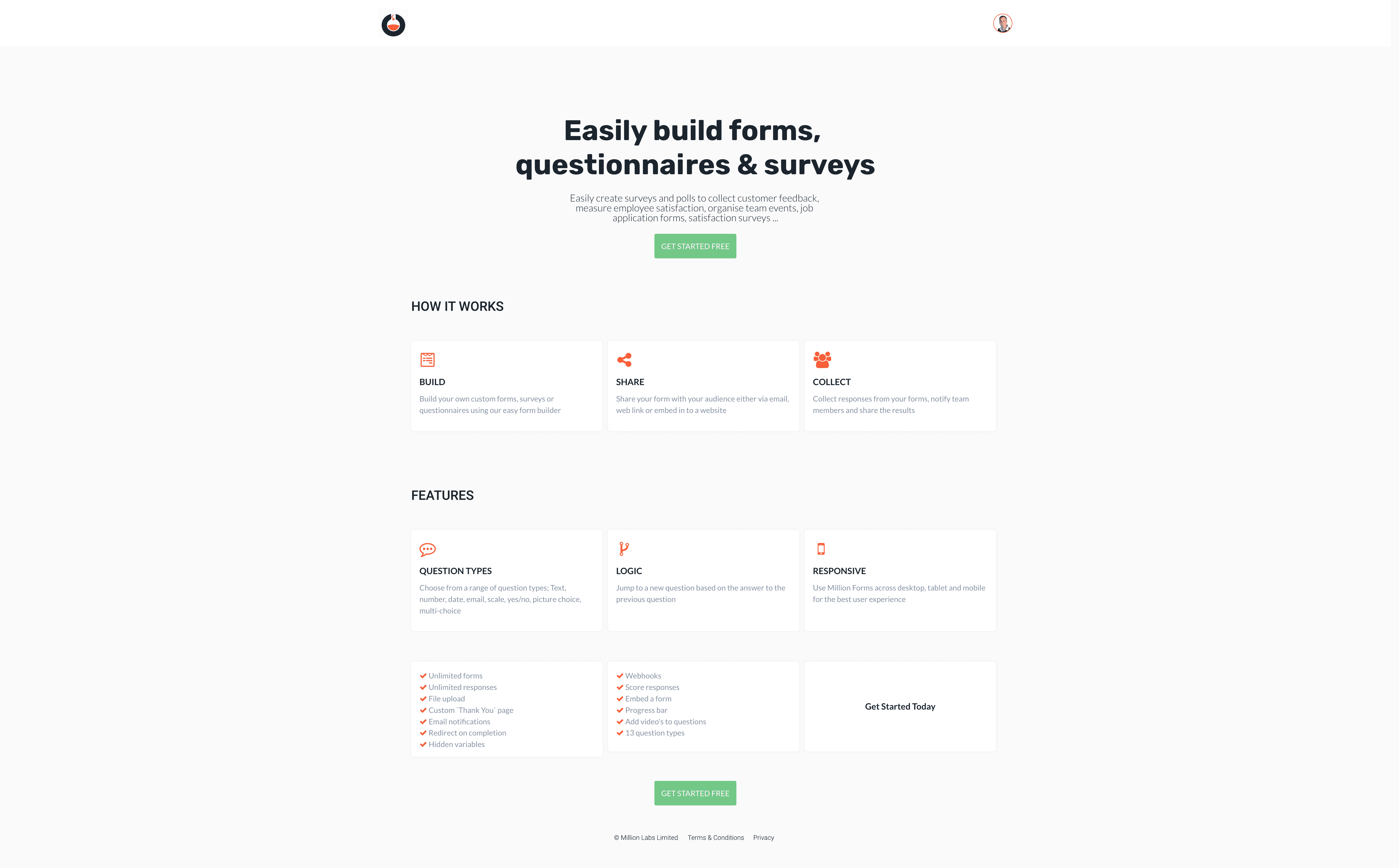

Sometime last year we needed a forms product with some specific requirements, so rather than use an off-the-shelf solution like Google Forms or Typeform, we decided to build our own (millionforms.com).

We have been using it internally for about 6 months now, so it's not really a Minimum Viable Product (MVP): the forms side is quite functional, but as an overall app it's pretty basic. We built it using Bubble.io and have probably put 200 hours of work into the product (we typically scope an MVP at 100 hours of work).

The app has no payment function, just a landing page, signup and then the forms functionality.

Million Labs works with Startup Founders from all over the world to launch no-code Minimum Viable Products, so we have lots of lessons learnt along the way. We always tell founders to start with a basic product and test the market, then iterate, rather than trying to build something that isn't an MVP. Given that is our advice, we have tried to follow it, and here is what happened.

With each test or iteration of the product we are testing a hypothesis, gathering data, then planning the next test.

1st Test

Test duration: 7 days

Hypothesis 1 - Will people use the product? Is it functional, and does it meet their form requirements?

Hypothesis 2 - We can acquire customers at a reasonable Cost of Acquisition (CoA). Other products in the market charge somewhere between $20 and $30 per month, so if we can acquire a customer for less than $20 then we will make money in month 1 of that customer. For example, if we charge $20 per month per customer and the CoA is $10, we make $10 in month 1.

The test for this was simple: we run a Google Ads campaign against our landing page and measure the following:

- How many clicks through to the landing page
- Cost per click
- How many people create a user account
- Cost of all ads / signed-up users = CoA
- How many users create a form
- How many forms get at least 1 response

1st Test Results

We ran the Google ad for 2 weeks and here are the results:

- 5158 clicks (click-through rate, CTR, of 8.84%)
- Cost per click: £0.03
- User accounts created: 853
- Cost of ads: £150.62 / 853 = 17p CoA
- Users who created a form: 853 (all of them)
- 156 forms have >1 response

Another stat: the 853 users created 1071 forms.
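The ratios in these results can be reproduced with a few lines of Python. This is only a sketch: the `AdTest` class and its field names are made up for illustration, and the figures are the ad spend, clicks and signups quoted above. Computed exactly, the CoA comes out at roughly 17.7p, which the post quotes as 17p.

```python
from dataclasses import dataclass


@dataclass
class AdTest:
    """Headline numbers for one ad test (hypothetical helper, not part of the app)."""
    ad_spend_gbp: float
    clicks: int
    signups: int

    @property
    def cost_per_click(self) -> float:
        # Total ad spend divided by the number of clicks.
        return self.ad_spend_gbp / self.clicks

    @property
    def cost_of_acquisition(self) -> float:
        # Total ad spend divided by the number of signed-up users.
        return self.ad_spend_gbp / self.signups

    @property
    def signup_rate(self) -> float:
        # Share of clicks that turned into a user account.
        return self.signups / self.clicks


# Figures quoted in the 1st test results.
test_1 = AdTest(ad_spend_gbp=150.62, clicks=5158, signups=853)

print(f"Cost per click: £{test_1.cost_per_click:.3f}")
print(f"CoA:            £{test_1.cost_of_acquisition:.3f}")
print(f"Signup rate:    {test_1.signup_rate:.1%}")
```

The same helper can be reused for later tests by swapping in their spend, click and signup numbers.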

1st Test Conclusion

As a 1st test I would say these results are amazing and certainly not what was expected. The cost of acquisition was great, if it can be sustained; more on this later.

Hypothesis 1 - Will people use the product? We didn't get any feedback (however, when I checked the site, we had no email or other contact details on it, so that may be why), but we did get people using it, creating forms and sending forms out for responses. So I would say hypothesis 1 is met: people will use it.

Hypothesis 2 - Can we acquire customers for a reasonable CoA? This is half proved. While the CoA was very low (17p), we were giving the product away for free, and the Google advert said so. So what we have proven is that we can give away something for free at a cost to us of 17p plus running costs. But this feeds nicely into our 2nd test.

2nd Test

Test duration: 7 days

Hypothesis 3 - Will customers upgrade from Free by paying a one-off fee of $5 for the product?

To test this we need to do a bunch of development work. Here is a list of that work:

- Install Stripe
- Configure a way for the customer to pay (checkout flow)
- Configure a way of tracking which customer is on which pricing plan (leave the current 800+ customers on the free plan, but any new customers are on the $5 plan)
- Restrict access once a form gets 25 responses, to force the user to a paid plan
- Restrict the number of forms to 1 for free and force an upgrade when adding the 2nd form
- Update the landing page with pricing

My estimate was that this is about 4 hours of work. It turned out not to take that long, probably closer to 2 hours.

2nd Test Results

The test ran for 7 days, but I only ran the Google ad for 24 hours because, as you can see, we were not short of clicks and signups, and I figured the test is about further down the funnel, i.e. getting users to pay.

Here is a video I made halfway through the test with some reflections.

The total cost of the test was £17.05 for the 487 clicks.

2nd Test Conclusion

I adapted the test mid-week to get more layers in the funnel for greater insight, but this didn't alter the test, so it's still valid (see video above for details). The two areas of friction in the funnel are activating the email address after signup and getting responses to a form. I suspect the challenge with forms getting responses is not a Million Forms product issue but a factor of time: it takes a while for people to promote their form and start to get responses.

I will move on to test 3, but the test 2 cohort will continue to run, so we will see in the next couple of weeks if we get more forms with responses.

3rd Test

Test duration: 1.5 days

Hypothesis 4 - Customers will pay a one-off fee for the product of $5 on signup

To test this we need to do a bit of development work. Here is a list of that work:

- Move Stripe payment to the signup process
- Update the landing page with no mention of free
- Remove "free" from the adverts in Google Ads

This development work took approximately 20 minutes to complete and test.

3rd Test Results

After the first 24 hours we had 28 signups but none paid. So we updated the onboarding to show a pricing screen after signup and before the checkout, in the hope that this explained what they got for their $5.

3rd Test Conclusion

Zero paid. They all dropped off as soon as they hit the checkout, so 100% were lost from the funnel.

4th Test

Test duration: 4 hours

Hypothesis 5 - $5 feels too cheap, so people think it's a scam; raising the price to $10 may convert.

4th Test Results

I stopped this test after 4 hours because the results were looking similar, i.e. no conversion to paid. Halfway through the test I looked at the users that were signing up to see if I could get a feel for the user demographics from the email addresses, i.e. were they corporate emails, were they male or female, and what was their likely origin based on their name (not scientific, but it would give me a feel). Based on this quick check, it looked to me as though they were mostly from Africa or India.

As a result of this finding, I updated the app to capture the browser timezone location when users signed up. I then captured location data on 14 users: 6 from Africa and 8 from Asia. This matched my theory from just looking at the email addresses.
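As a rough illustration of how captured timezones can be turned into a regional breakdown, here is a minimal Python sketch. It assumes the app stores IANA zone names such as `Africa/Lagos` (the form browsers report through `Intl.DateTimeFormat().resolvedOptions().timeZone`); the post does not say how the value was actually stored. The function names and the sample list are hypothetical, not the real signup data.

```python
from collections import Counter


def region_of(iana_timezone: str) -> str:
    """Return the area prefix of an IANA zone name, e.g. 'Africa/Lagos' -> 'Africa'."""
    area, _, _ = iana_timezone.partition("/")
    # Note: some prefixes (e.g. 'Etc', 'Indian') are not continents.
    return area or "Unknown"


def signups_by_region(timezones):
    """Count signups per area prefix."""
    return Counter(region_of(tz) for tz in timezones)


# Hypothetical sample for illustration only.
sample = ["Africa/Lagos", "Africa/Nairobi", "Asia/Kolkata", "Asia/Dhaka", "Asia/Kolkata"]
print(signups_by_region(sample))
```

A timezone is only a proxy for location, so a breakdown like this is best treated as a sanity check rather than precise geography.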

4th Test Conclusion

Based on the demographic of the users clicking on the ads and signing up, the data suggests that any price other than free is a blocker. It's likely that Google Ads learnt that people in those geographies were clicking on the ads and therefore served them up more (the campaign is set to optimise for clicks). So the data from all the previous tests is probably not valid. For test 5 we will limit where the ads are shown.

5th Test

Test duration: 3 days

Hypothesis 6 - Excluding geographies from the Google Ads campaign will improve the chances that a user will convert at $10.

5th Test Results

This was a trial-and-error test: every time a new user signed up but didn't pay, we checked the location and excluded it from the Google Ads location list. We ended up removing most of sub-Saharan Africa and a few countries in Asia. This means the results are peppered with signups that were highly unlikely to convert.

However, we had a customer from the UK sign up and pay $10. WE MADE REVENUE!!! From zero to first revenue in 3 weeks of iteration.

5th Test Conclusion

This test showed us a Cost of Acquisition (CoA) of £7.16, or about $10, so we would be at breakeven if we could continue to acquire at that cost. In theory we should be able to get this cost down, because the test included quite a lot of unfiltered locations.

6th Test

Test duration: 7 days

Hypothesis 7 - Will customers upgrade from Free by paying a one-off fee of $10 for the product?

This is a repeat of Test 2 but with the locations excluded on the ads so we have the right demographic.

6th Test Results

No revenue from this test but some good data that we can action to improve the funnel.

6th Test Conclusion

We clearly have an activation problem with the email (only 31% activated). On doing a bit more digging, we realised that we were still using the Bubble standard email function and not the authenticated SendGrid account. This is likely why lots of emails were going into spam, so we switched to our paid-for SendGrid account. Hopefully this will improve the deliverability of the activation emails and bring that percentage up to near 100%.

We also came across a slight anomaly in activated accounts. We mark an account as activated if the user logs in a 2nd time, but a couple of users had activated while logged in, created a form and then never logged in again, so they were shown as not activated. We fixed this with a backend workflow that checks for activated users each night and marks them as activated.

Looking at the data in more detail, it looks like none of the forms had more than 2 questions added. This suggests that although earlier tests had shown people generating usable forms, this cohort of users was struggling to build the form they wanted. This could point towards usability (UI/UX) issues.

For the next test we will install Hotjar to capture videos of actual usage, which may help identify any UI/UX issues.
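The real fix was built as a Bubble backend workflow, so there is no code to show, but the logic of a nightly check like this can be sketched in Python. Everything here is an assumption for illustration: the `User` fields, the `email_confirmed` flag and the rule that a confirmed email or a 2nd login counts as activated.

```python
from dataclasses import dataclass


@dataclass
class User:
    email: str
    email_confirmed: bool  # hypothetical: set when the activation link is clicked
    login_count: int
    activated: bool = False


def mark_activated(users):
    """Nightly pass: flag users who confirmed their email or logged in at least twice."""
    changed = []
    for user in users:
        if not user.activated and (user.email_confirmed or user.login_count >= 2):
            user.activated = True
            changed.append(user)
    return changed


# Hypothetical users for illustration only.
users = [
    User("a@example.com", email_confirmed=True, login_count=1),   # confirmed, never returned
    User("b@example.com", email_confirmed=False, login_count=2),  # logged in a 2nd time
    User("c@example.com", email_confirmed=False, login_count=1),  # not activated
]
newly_activated = mark_activated(users)
print([u.email for u in newly_activated])
```

Running the pass a second time changes nothing, which is what you want from a job that runs every night.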

7th Test

Test duration: 7 days

Hypothesis 8 - Users are not generating forms because the UI is too confusing.

The budget for ads has been increased from £10 per day to £12.50 to speed things up, hence the test is for less time. Google estimates this will provide 28 more clicks per week, so if we are running it for 4 days that should provide 16 extra clicks.

Changes for this test: activation emails are now sent via a verified SendGrid sender, and Hotjar is installed for tracking user activity.

7th Test Results

2 days into the test, the results were not great: 6 signups and only 1 activation. This is where Hotjar really helps. A quick look at one of the videos showed the user was on mobile. While the home page and the signup are mobile responsive, the dashboard and form builder are not, and are therefore pretty much unusable on mobile. To be honest, this is not a service for mobile users: the forms can be completed on mobile but not administered. The creation of forms is too complicated to be done on mobile.

So this is an oversight (a bit like the locations): the ads shouldn't be showing for people on mobile. I therefore updated the Google Ads campaign to exclude mobile.

Here is the breakdown prior to the change. As you can see, most of the traffic was coming from mobile.

Here is the change to prevent it from showing to mobile users.

It has now run for 7 days and we had another paying customer (hurrah, 2 down, 98 to go). Here are the results:

7th Test Conclusion

The numbers have been dropping with each test; however, the quality of clicks has been improving, so this is a good thing. I believe we now have the right demographic, the people most likely to pay for the product. Based on this last test our Cost of Acquisition is £90, so charging $10 isn't going to work. We therefore have a choice: we can either try to get the CoA down (realistically we can probably get this down to £30 with some work) or we can increase the price.

We looked at the paying client to see if anything in the data explained why they upgraded to a paid plan. What we found was a bug: somewhere in adding the paid features we had set the forms to be inactive when created, and the button to activate the form was not hooked up (so you couldn't activate your form). Our guess is that the customer upgraded to see if that allowed them to activate their form, so not really a normal use case. This also means that none of the other signups were able to use the product, so basically this test was voided by the bug.

This shows how important testing is. OK, we only wasted £90 on the test, but we also wasted 1 week, which in Startup land is the more costly thing.
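To make the choice concrete, here is a minimal Python sketch of the one-off unit economics. The function names are hypothetical, and the exchange rate is an illustrative assumption passed in by the caller, not a figure from this post.

```python
def breakeven_price_usd(coa_gbp: float, usd_per_gbp: float) -> float:
    """One-off price in USD needed to cover the acquisition cost of one customer."""
    return coa_gbp * usd_per_gbp


def margin_usd(price_usd: float, coa_gbp: float, usd_per_gbp: float) -> float:
    """Profit (or loss, if negative) per customer on a one-off sale."""
    return price_usd - breakeven_price_usd(coa_gbp, usd_per_gbp)


RATE = 1.25  # illustrative USD-per-GBP rate, an assumption

for coa_gbp in (90, 30):  # current CoA and the CoA we think is reachable
    price = breakeven_price_usd(coa_gbp, RATE)
    loss = margin_usd(10, coa_gbp, RATE)
    print(f"CoA £{coa_gbp}: breakeven price ${price:.2f}, margin at $10 is ${loss:.2f}")
```

Under these assumptions, even the optimistic £30 CoA needs a one-off price well above $10 just to break even.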

The plan is to repeat this test with a working product to see if we get better results.

8th Test

Test duration: 7 days

Hypothesis 9 - Users were not generating forms because of the bug.

8th Test Results

As the data was coming in, we looked at the Hotjar videos to try to get insight into what users are actually doing.

1st video: the user never figures out how to create a question in their form, so bails (Hotjar video).

2nd video: the user creates a multichoice question, then adds a second one, then just bails. No idea why (Hotjar video).

Here is the funnel for this test

8th Test Conclusion

We have reached the stage that most Startup founders face: it feels like a dead end. We have a funnel and we can see where the blockage is, i.e. creating a form. The top of the funnel is OK; of course it can be improved, but it is workable.

We have reached the point where the data really doesn't tell us much. The Hotjar videos are super helpful for spotting obvious things, like a button that doesn't work, but they often leave you with more questions than answers. Why did the user just bail before they created a form?!!!

We have reached the point where the only solution is to speak to some of these customers and get direct feedback. We now have enough users of the right demographic to send out an email to try to get some feedback.

Test 9 will be an email to the users.

9th Test
